-

Magazine No. 36

Uncanny Valley

-


page 02

Cover

Uncanny Valley

-

page 03

Editorial

Uncanny Valley

-

page 04 - 05

Uncanny Valley?

When empathy turns to revulsion

-

page 07

The Good Thing About Robots

New animated film from ECAL

-

page 08 - 13

A Dog’s Life

Robots that were made to be loved

-

page 14

Nothing Can Harm Them

Synthetic bees by Greenpeace

-

page 15 - 16

The Eye of the Swarm

Superflux’s Drone Aviary

-

page 17 - 25

Machines of Loving Grace

The city as a distributed robot & the omnipresent intelligence of data networks

-

page 27

In our own image

Law and order in robotics

-

page 28 - 29

The Bionic Man

Hugh Herr, head of Biomechatronics at MIT

-

page 30 - 35

A Kind Of Magic

A conversation about losing control over our own technology

-

page 36 - 37

Cyber Sculptors

Kram/Weisshaar’s Robochop project

-

page 38 - 45

The Otherness of the Machine

Jan Willmann from ETH Zurich on robotics in architecture

-

page 46

Tug Bugs

Microbots with superstrength

-

page 47 - 52

Matter Design's

Best of commercial robotic fabrication innovation

-

page 53

Forever Alone

One small step for a robot...

-

page 54 - 57

In the Photo Booth with...

Katarzyna Krakowiak

-

page 58

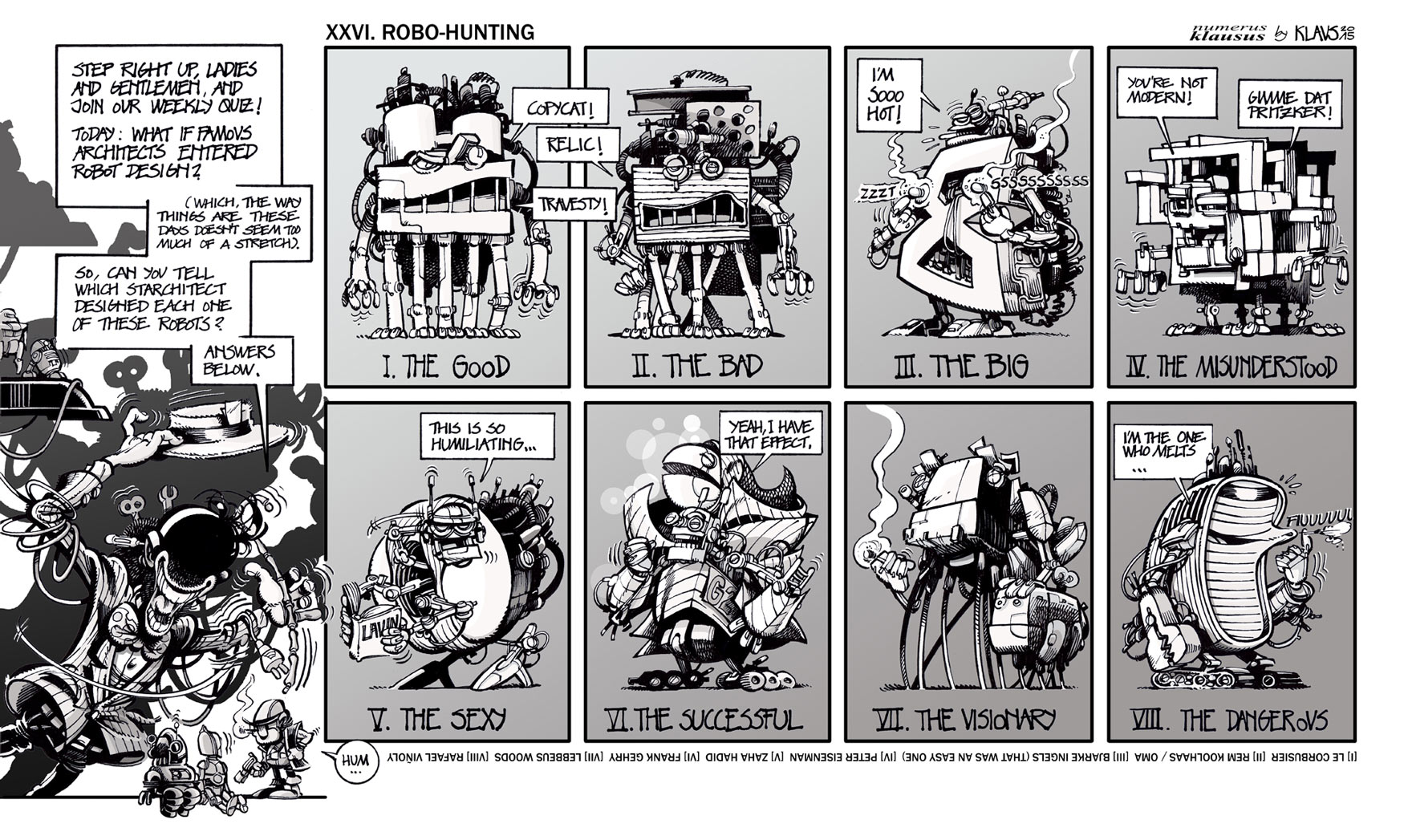

Klaustoon

XXVI. Robo-Hunting

-

page 59

Next

Zaha

-

uncube's editors are Sophie Lovell (Art Director, Editor-in-Chief), Florian Heilmeyer, Rob Wilson and Fiona Shipwright; editorial assistance: Sara Faezypour; graphic design: Lena Giovanazzi; graphic assistance: Diana Portela.

uncube is based in Berlin and is published by BauNetz, Germany’s most-read online portal covering architecture in a thoughtful way since 1996.

Welcome to the uncube robot issue!

uncube issue no. 36: Uncanny Valley takes a close look at current developments in robotics and intelligent systems with respect to architecture and beyond. From bionic limbs to stone cutting, construction sites to K9 pets, we talk to innovators and researchers about our relationship with robots and how they will serve us in the future – and about some of the implications that we may need to consider more carefully.

Packed with more film and animation than ever before, this issue takes some concentrated reading and viewing. But instead of handing over yet more effort to your smart support systems, take some time to absorb the message (and/or massage) our medium contains. If you are going to surrender your future rights to the machine then you should at least read the manual first!

uncube at your service.

Cover image: Spatial Wire Cutting project by Gramazio Kohler Research, ETH Zurich, 2015. (Photo: Gramazio Kohler Research, ETH Zurich)

-

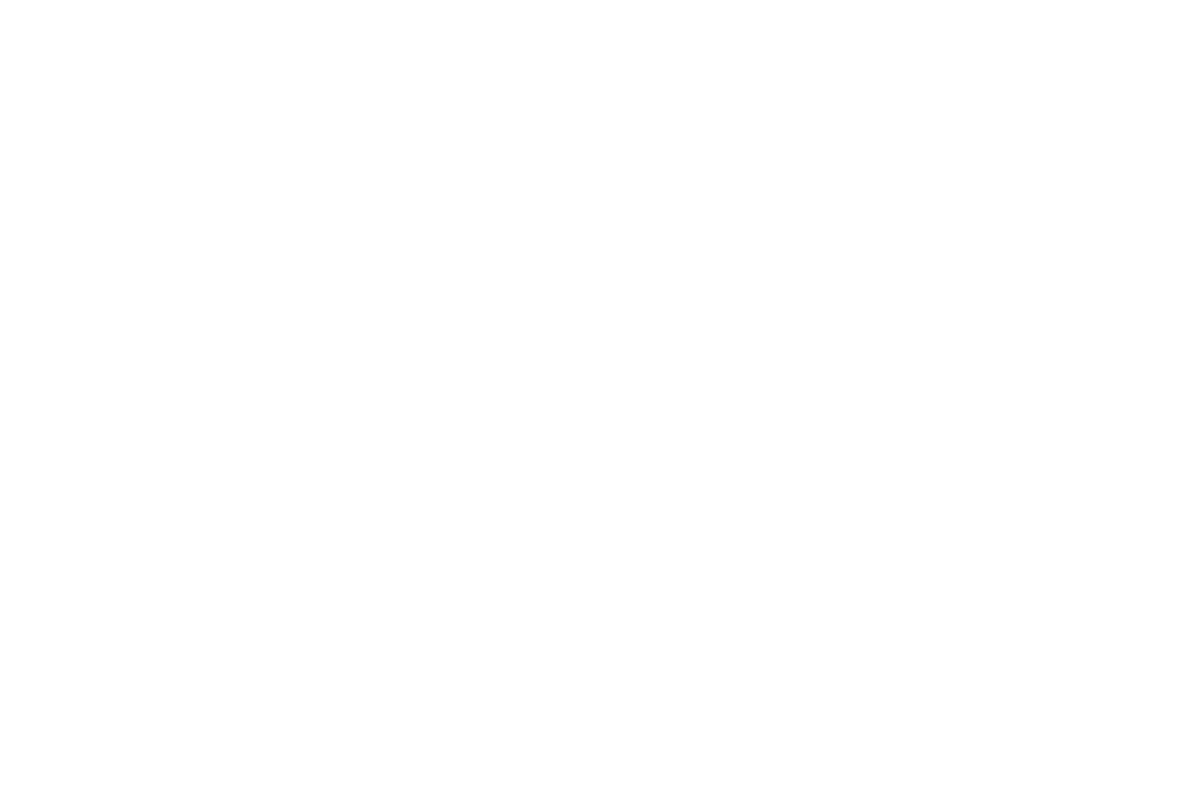

The term “uncanny valley” was coined by the Japanese engineer Masahiro Mori in 1970 to describe the sense of eerie unease that we, the human viewers, feel when confronted with an artificial machine created in our own image, one that comes very close to the real, but not quite. It is the double-take point at which empathy turns to disquiet and then revulsion. While the vast majority of the machines we’re creating to serve us today are not made in our own or nature’s image – with android replicants still more a quirky cul-de-sac of futuristic fantasy – the machine is now hidden in another way: our technological assistants are likely to be intangible, remotely controlled bits of kit – take GPS guidance systems, for example. Quite often now you ask yourself: where is the machine? And who is it serving?

Even when the machines we rely on appear comfortingly domestic and present – take our smart phones – we haven’t the faintest idea how they work, yet trust them with the most intimate details of our lives, knowing they are just interface points in vast networks designed to support, but also to track and monitor us.

This is the new uncanny valley: it is the feeling we have about the idea of being chauffeured around in a driverless car; in watching an industrial robot build something with more precision than the most skilled craftsman; in realising that we have developed an emotional attachment to a machine, given it a pet name and mourned its loss when it dies as if it were a friend. It is the fear of impending technological singularity; the growing zone of discomfort within the complex systems of machines and technology into which our lives are becoming embedded, systems that are creating and building new environments – like smart cities – into which we fit: systems unknowable by any one individual, yet to which we are fast becoming so inured that we no longer perceive them to be artificial.

Both physical and invisible, the robots are here to stay. We made them to help us, not to make ourselves helpless. But a good tool is only useful if you make the effort to understand what it is and how to use it. We are the humans. Can we still trust ourselves to be in charge of our own futures?

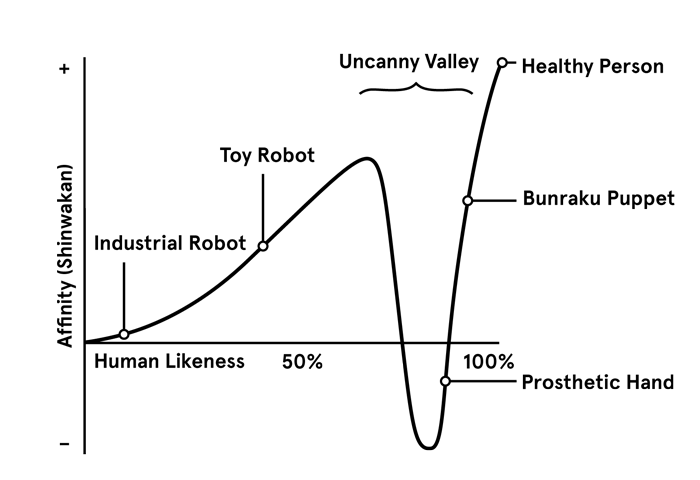

(sl)

Jordan Wolfson’s “Female Figure”, 2014. (Stills taken from The Artist's Studio MOCAtv feature, courtesy MOCA, Los Angeles)

-

The Good Thing About Robots

Thymio is an educational robot used in schools in Switzerland and France to teach primary schoolchildren programming as well as a basic understanding of technology. It was developed jointly by EPFL and ECAL in Lausanne. In a recent workshop, its designers and Media and Interaction Design students at ECAL put it through its paces. I (sl)

(Image and film: Thymio meets ECAL)

-

Robots that were made to be loved

By Claire L. Evans

Do tech corporations have moral responsibilities towards customers who develop emotional relationships with their products? Or should they just be allowed to take on a life of their own? uncube’s science and culture correspondent Claire Evans reports on the commercially obsolete canimorphic AIBO robots whose owners are struggling to keep them “alive”.

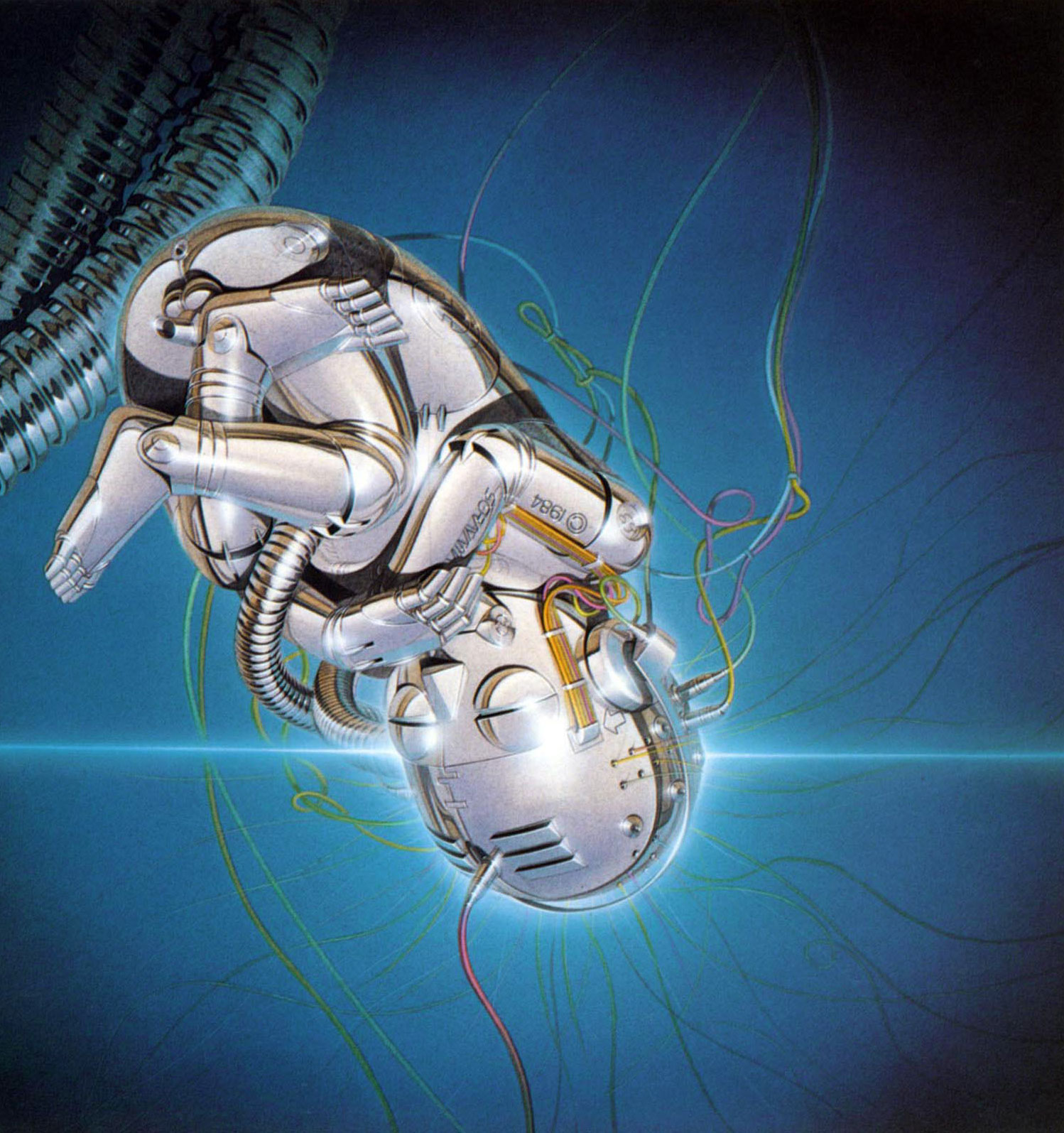

Previous page: Image © Sorayama/Artspace Company Y/ Uptight, 2015. This page: Aibo Entertainment Robot (ERS-110), Hajime Sorayama, Sony Corporation, company design. (Photo © the Museum of Modern Art)

-

Robots work. That’s what they do. From the first usage of the word – in the Czech writer Karel Čapek’s play R.U.R. (Rossum’s Universal Robots), in which “robots” are biological drones engineered to serve human masters – labour has been central to the robot’s identity. Both in the collective fantasy of science fiction and in the factories of the 21st century, robots work: building cars, assembling consumer electronics, lifting loads and even checking in hotel guests.

That is, except for AIBO.

A robot pet designed and marketed for domestic use by the Sony Corporation, AIBO was never meant to do anything. Despite being the most sophisticated product ever offered in the consumer robot marketplace, it is not an appliance; it is not designed to bear any burden beyond the emotional attachment of its owner. Or, as Takeshi Yazawa, vice president and general manager of Sony Entertainment Robot America, once explained: “AIBO loves you, you love AIBO, and that’s it”.

AIBO – which means “companion” in Japanese, but is also a quasi-acronym for Artificial Intelligence Robot – is a canimorphic robot. It wags its tail, cocks its head, and sits on its hindquarters when instructed, an eager puppy moulded in chrome-tinted plastic. But it’s more than a dog, of course. AIBO learns, expresses anthropomorphic emotional states, and communicates through auditory tone combinations and eye lamp patterns. To the inexpressible joy of the thousands of owners still maintaining their pets – despite the fact that Sony discontinued AIBO in 2005 and stopped repairing them altogether in 2014 – the robot dogs can even dance, sometimes synchronously in groups. Videos of this phenomenon are strangely moving: a chorus of willing companions, their metallic paws clicking on hardwood in precise concert.

The original AIBO phenotype was designed by Hajime Sorayama, a Japanese artist best known for his erotic airbrush paintings of female androids, flat images rendering the curves of women in glassy, metallic lustre. His robot design, now in the permanent collections of the Smithsonian Institution and MoMA, is a fascinating instance of life imitating art: Sorayama made a career illustrating sensual gynoid women in the throes of pleasure, lounging, riding bicycles, handling consumer goods, completely untethered from labour; and AIBO, the only robot he ever participated in building, knows only play.

»In Japan, emotional attachment to robots is deeply ingrained.«

Later iterations of AIBO were designed by Shoji Kawamori, better known as an anime creator, and the manga writer and artist Katsura Hoshino. AIBO was exceptional for many reasons: although it functioned, it didn’t work, and artists – not just engineers and industrial designers – were instrumental in every phase of its design.

The result was a robot that immediately became an emotional centre of gravity. Designed with an element of fantasy and a respect for the grace of a personal robot’s objecthood, AIBO may well have been the first consumer robot to be loved. It could never disappoint its masters because it had no job at which to fail.

In Japan, emotional attachment to robots is deeply ingrained. Shinto, Japan’s indigenous religion, is an animist faith: its adherents believe that non-human entities, such as animals, plants and even robots, possess a spiritual essence. AIBO is no exception. As a recent New York Times documentary profiled with great empathy, AIBO owners all over Japan grieve for their pets, which – now that Sony has ceased all repairs and customer support in a bid to improve profitability – are starting to break down irreparably.

Image © Sorayama/Artspace Company Y/ Uptight, 2015

-

»What if all robotics development was rooted, like this, in deep attachment?«

-

Even if Sony still made new models, a lost AIBO could never be replaced, since each dog’s personality is moulded by a life spent learning from its owner. Such is the attachment that develops that it is not uncommon for AIBO owners to hold funerals for dogs departed to the great charging cradle in the sky.

The AIBO mind is mutable. When Sony still manufactured them, between 1999 and 2005, it sold companion software called AIBOware to run on the synthetic pet. The Life AIBOware emulated the lifespan of a real animal, allowing owners to raise the robot from puppyhood to maturity. In later years, third-party developers began to create open-source software packages for AIBO, a culture Sony initially attempted to curtail, invoking copyright issues, before eventually releasing an SDK for non-commercial use. Using the high-level scripting language R-Code, programmers have created a litany of AIBO personalities, many of which are available for free on the Robot App Store. DogsLife, for example, expands the basic AIBO personality with hundreds of additional performances and “fun doggish whims”, and Disco AIBO allows AIBOs to dance.

Active forums all over the web are testament to the culture of AIBO hacking, which remains small but robust; the dogs are malleable and pliant, their machine minds abandoned by their corporate makers, waiting only to be moulded further. Robotics students and hobbyist engineers have embraced AIBO as an inexpensive research and teaching tool. And even in non-technical circles, a sort of folk culture has sprung up around these machines; their owners clothe them, pose them, take them on holidays, and swap software personalities and advice online. Despite being “obsolete”, AIBOs are arguably more capable and more intelligent than ever before, simply by virtue of being loved.

It’s a significant enough phenomenon to warrant the question: what if all robotics development was rooted, like this, in deep attachment? What if, instead of developing intelligent machines for work – in industry, for war, or explicitly to displace human labourers – we developed intelligent machines out of love? Never mind what it can do. You love AIBO, and that’s it – until one day, AIBO, or something like it, loves you back. I

Nothing Can Harm Them

Microrobotics is not quite this advanced yet – but this speculative scenario is still uncannily believable: will we really end up having to create synthetic substitutes to fill the ecological niches of the species we destroy? As a film made by Greenpeace to highlight the potential results of continued pesticide use suggests, “Newbees” could well be part of our future – if agribusiness doesn’t start taking more care of the bigger picture. I (sl)

Film © Greenpeace

-

The Eye of the Swarm

Superflux's Drone Aviary

As a design agency working at the intersection between emerging technology and everyday life, Superflux creates speculative scenarios that are effective precisely because their implications can be easily connected to present-day experience. Their Drone Aviary project posits a world in which drones have free rein through civic space; it bears an uncanny closeness to our current reality, not so much because of the technology’s capabilities but rather because of the insidious nature of its encroachment.

The term “drone” is set to become as ubiquitous in the language of consumer deliveries (Amazon) as it has been in military parlance (Afghanistan). Likewise, the idea of data-collecting devices that track individuals is no longer the Orwellian stuff of nightmares: we carry them every day. Drone Aviary’s strangely familiar imagery – onscreen ads that look something like a more banal version of Blade Runner, ever-present “share” buttons – underscores that this particular future is very much here (or at least circling just a few metres above).

Superflux is an Anglo-Indian design practice based in London, but with roots and contacts in the Gujarati city of Ahmedabad. Working closely with clients and collaborators on projects that acknowledge the reality of our rapidly changing times, Superflux design with and for uncertainty, instead of resisting it, and have a particular interest in the ways emerging technologies interface with the environment and everyday life.

Superflux.in

With Drone Aviary drawing parallels to Alfred Hitchcock’s menacing The Birds, Superflux describe their interest in the “precipice moment” for this project – the point at which small groups of “birds” are in view but the swarm is yet to come – but in their scenario, the birds are very much man-made and multi-media. “When the network is digital and invisible it appears to be like magic and we remain unchallenged”, the designers note, “but what happens when it starts becoming visible and gains physical form?”

Interrogating the architecture of networks – structures light in their physical manifestation but extremely “weighty” in terms of the authority they wield – ultimately reveals such far-ranging transparency to be a form of opacity. For when the edge of the network is blurred, so too is the legal framework that might be used to regulate it. I (fs)

Shot entirely from the aerial perspective, “Drone Aviary” uses five different drones to represent a strand of discourse concerning their use in civic space. (All images and film: Superflux)

-

Machines of Loving Grace

The city as a distributed robot & the omnipresent intelligence of data networks

Text by Sam Jacob

Illustrations by Daniel Dociu

Architect Sam Jacob takes us on a not-so-futuristic journey from the strategic datascape of the contemporary battlefield to built environments with embedded intelligence. And he finds the “machines” that control us to be disturbingly unaccountable.

If you were to conduct an archaeology of visions of the future, from the mounds of speculative dirt you’d dig up a vast array of artificial humanoid limbs and metallic heads. These are the synthetic body parts of a Maschinenmensch future that has yet to come to pass. But then science fiction is always about the present, so an archaeology of science fiction is a history of hopes and fears frozen in fictional form. The robots that inhabited these fictions were not technological entities but philosophical ones – images of ourselves rendered in ways that let us imagine what it might still mean to be human in worlds transformed by progress. Our cities remain free of these technological doppelgängers but that doesn’t mean these same paranoias and dreams are not part of our world.

If you want to see what the future of the city might be, you only have to look at the recent battlefields of Afghanistan. Here was a networked field, a military internet of things, connecting people, objects and their flows through space in a three-dimensional matrix of technology. Above, flocks of satellites, planes, helicopters, drones and aerostats. On the ground, swarms of sensor-augmented vehicles, teched-up soldiers and a cohort of robot devices.

All of this was controlled by an invisible tactical communications network that stretched from the local to the global. Biological units, equipment, their robotic menagerie and their remote directors cast an invisible net across the landscape: a net that surveyed the landscape, gathered data and intelligence, communicated it, processed it and in turn remote-commanded movement and logistics in a constant choreography of lethal force.

Like the eyes of MQ-9 Reaper drones connected through electromagnetic optic nerves to their pilots back in Las Vegas, it was both there and not there. The technology of warfare as deployed in Afghanistan was an undoubted occupation of space but simultaneously distanced from that very same space. The rise of remote-ness – of drones and robots patrolling sky and ground – was a new level in the rendering of landscape as strategic datascape. All the better to monitor and maintain security with the greatest efficiency possible – at least in theory.

The battlefields of Afghanistan have been de facto experiments in new forms of networked spatial practice, the kind of space that is coming soon to a city near you.

The relationship between military practice and urbanism is nothing new, of course. It has a long and noble history: from fortifications as military device to their re-emergence as suburban decoration; in the radical radial reconstruction of Paris by Baron Haussmann into easily surveyed boulevards; in the contemporary use of green-zone type defences around potential urban targets; even in the origins of the architectural axonometric projection as a means to three-dimensionally plot the trajectories of artillery shells. This history often describes a process in which ideas first tested in exceptional military conditions are later imported into the normality of urban life. For us that means: first Helmand, then Hampstead.

The tactics and technologies of the contemporary military field serve as a (literal) avant-garde of the new urban concept of the smart city – a useful point of reference if we want to picture what it might mean, because the idea of the smart city is as pervasive as it is hazy. What it suggests is a merging of technological promise with green futures, of automation and regulation of flow, all providing an increasingly frictionless form of urbanism: devices, machines, buildings, infrastructure in constant information-jabber aimed at optimised efficiency.

It is a dream of a built environment with embedded intelligence, of huge amounts of live data streams processed by algorithms that in turn feed back into the physical choreography of the city. The smart city, it’s suggested, will transform government services, transport and traffic management, energy, health care, water and waste – pretty much everything. It addresses gigantic issues of climate change, economic restructuring, ageing populations, pressures on public finances and the very idea of urban democracy. In other words, the smart city promises (and threatens) to transform our entire urban milieu. The social, physical, political, economic and environmental realms of the city will be (are) reframed by the “intelligence” generated by massive data harvesting and processing.

This smart-dream-future of the city might only now be beginning to happen, but it has a long history – one that originates in a very different place.

In 1967, Richard Brautigan, poet in residence at California Institute of Technology, published All Watched Over By Machines of Loving Grace. The poem described a Haight-Ashbury techno fantasy. Here is an extract:

I like to think

(it has to be!)

of a cybernetic ecology

where we are free of our labors

and joined back to nature,

returned to our mammal

brothers and sisters,

and all watched over

by machines of loving grace.

The text was printed over an image of electric schematics and it set out a utopian vision of a techno-pastoralism, where new digital machines could return us to a prelapsarian state, at one with nature in an electric Eden.

Within architectural culture, a similar idea was expressed in Archigram/David Greene’s Bottery (1969), conceived as “a fully-serviced natural landscape”: an invisible built environment that would let you “do your own thing”, manned by patrolling bots providing services, with nodes disguised as landscape features in the form of Log and Rock Plugs dispensing data and power. Just as with Brautigan, technology is figured as a liberating force whose sophistication would allow us to return to an innocent “natural” state.

Greene describes a “bot” as a “Machine Transient In the Landscape” and the contents of the Bottery as:

1. (An architecture of) Time

2. Planet Earth Spacegarden

3. Some existing hardware

4. Some new hardware

5. The idea of the invisible

He writes that the “Bottery is part of the idea of the Spacepark Earth” and for further details “write to Sierra Club Foundation, Mill Tower, San Francisco, California”.

These kinds of late-1960s zippy ideas were – and remain – central to the West Coast techno-utopian dream. Think of Apple Inc., now the most valuable company in the world but named after the orchards of the All-One Farm commune set up by a charismatic, free-loving LSD propagandist. Think of the gigantic techno-Xanadus currently under construction. Of Apple’s perfect sleek Jung circle (complete with its own apple orchard), designed by Foster; of Amazon’s Bucky-bio-sphere-redux; of Google’s BIGwick mega-bottery replete with robot-construction drones.

These are places that are far more than office complexes. They are nothing less than 21st-century versions of the kinds of ideal communities, model villages and utopian settlements that were the foundation of modern urban planning: modern-day Port Sunlights and Bournvilles, high-tech versions of Robert Owen’s New Harmony. These historical examples are significant not just as worker towns but as places founded on profound reformist and idealistic principles. They demanded certain forms of behaviour and outlawed others; they figured their communities around micro-models of how the world could/should be.

Inserted GIF: New Apple HQ designs, Foster + Partners. (Image Courtesy Apple Inc.)

-

Google Self-driving Car; Google HQ renderings. (images courtesy BIG)

That’s how we should view these new tech-campus-worlds too: as contemporary model villages, places that embody (and enforce) entire world views, complete models of new forms of society organised around particular ideas of technology. And like their Victorian counterparts, they promote a particular idea of society. Bundled into code and chips is an unstated ideology of how the world should be organised, and of who should be organising it.

But, unlike their historical precedents, these new corporate models of society are not limited to the boundaries of their own real estate. With the global reach these corporations have – at scales beyond that of the city and the state – and with the depth of penetration into the minutiae of our lives, their ideas about the organisation and function of society are now all-pervasive. They are the backbone of the global model of the smart city. Everywhere is – or soon will be – California in all but name.

Away from the giants of consumer-facing technology are those involved in the (big) business of “smartifying” our cities (Arup estimate the global market for smart urban services by 2020 will be $400 billion annually). Companies like Cisco and Siemens are deeply involved in implementing smart initiatives in cities across the world. Meanwhile the likes of Uber and Airbnb are transforming specific models of urban organisation so fast and furiously that we can only expect more traditional urban models to be fundamentally reinvented.

But as this is unfolding, so too is a crisis in the idea of the city as a democratic space. The smart city is, mostly, an outsourced city.

Vote for whoever you like, they say, you will still get Serco – one of the giants of municipal service provision. Their tag line promises to “improve services by managing people, processes, technology and assets more effectively”. Say you are a city – or a state for that matter – Serco can help you by “providing safe transport, finding sustainable jobs for the long-term unemployed, helping patients recover more quickly, improving the local environment, rehabilitating offenders, protecting borders and supporting the armed forces” …amongst a host of other things traditionally delivered by the state and municipality itself.

Is Serco’s promise any different from those “machines of loving grace” that Brautigan imagined? Is it yet another of those 1960s dreams of freedom returned to us through deregulated neoliberalism? Are we watched over with loving grace but also by machines with suspicion in their eyes?

Sam Jacob is principal of Sam Jacob Studio for architecture and design. His work ranges from master planning and urban design through architecture, design and art projects.

Previously, Jacob was a founding director of FAT Architecture where he was involved in many internationally acclaimed projects including the BBC Drama Production Village in Cardiff, the Heerlijkheid Hoogvliet Park and Cultural Centre in Rotterdam and the curation of the British Pavilion at the 2014 Venice Biennale. He has exhibited at leading galleries and museums including the Victoria & Albert Museum in London, the MAK in Vienna and the Biennale in Venice.

Jacob is Professor of Architecture at the University of Illinois at Chicago; Visiting Professor at Yale School of Architecture and Director of Night School at the Architectural Association. He is also contributing editor for Icon magazine and columnist for both Art Review and Dezeen.

samjacob.com

Daniel Dociu is a sci-fi and fantasy artist who was raised in Cluj, the capital of Transylvania in Romania, where he also studied art and architecture at the Academy of Fine Arts. He moved to the US in 1990 and has lived in the Seattle area for over 20 years.

After working as a toy designer, Dociu switched to interactive entertainment. For the past nine years, he has been with ArenaNet, in the role of Chief Art Director for NCsoft, overseeing visual development for all of the publisher's North American projects, with a particular focus on the critically acclaimed Guild Wars franchise. He is also a prolific freelance artist, contributing to numerous publications, advertising and film.

danieldociu.weebly.com

The dislocation of the provision of urban services, whether officially privatised or not, from the city itself to global multinationals such as Uber, Google, Apple, Cisco or Serco, dislocates us as citizens from the inherent politics of those services. They are here but not here, as locally unaccountable as Vegas-based drone pilots.

Physically, the future city is a place where everything is itself and, variously equipped with sensors, computing power and autonomous mobility, also something else. If cars are reinvented as driverless devices, they become things that move us and things that regulate that movement at one and the same time. Street furniture is recast as both comfort and control. In other words, Greene’s Log Plug acts as a precursor to the transformation of the entire city fabric into both thing and node. The bot, in short, is everything and everywhere. The city itself is a distributed robot, a collection of sensors and functions linked through invisible networks of communication.

What does that make us as the citizens of these new smart cities? Are we condemned to be prisoners in an all-seeing digital panopticon of street benches? Are we suspect-citizens leaving data-trails across the urban landscape? Or are we liberated to become friction-free smart citizens in a new form of ultra-fluid urbanism doing our own thing?

Most likely both. As smart citizens we buy into the future of the city by equipping ourselves with phones, apps, RFID chips, driverless cars, networked homes and whatever else we can afford. Why? Because the attraction of the smart city is so great, because it is so convenient and because it does make us feel free. We are, in other words and to coin a phrase, voluntary prisoners of smart architecture.

The world just got littler...

To hold one in your hand is to hold a tiny power pack, but its energy comes from the greatest source of energy we know. The sun charges it and it charges your life at the same time.

Maybe you’re a Zimbabwean child that needs a light to study by at night. Or you might be headed to the Roskilde Festival and you need a light for your tent. Maybe you’re going hiking with your friends and you want something better than a battery-powered torch. Or you work in a Himalayan village and you need a hand-held light to guide your way back home through the mountains. You could be a design-nerd and you want to own a little light designed by Olafur Eliasson.

Or you’re a Sudanese mother and you want to prepare your family’s meals under a safe and healthy source of light. Or maybe you have a young family in Berlin and you want a colourful way to teach them about sustainability.

Whoever we are, wherever we go, there is more that connects us than divides us. And one of the most important things that holds us together is that we all need light in our lives. Of course the greatest light we have is the one we all share: the sun.

Sometimes it’s nice to feel that the big wide world just got a bit littler.

Welcome to our little world:

www.littlesun.com

-

In our own image

In 1942, the science fiction author Isaac Asimov published his famous Three Laws of Robotics: 1. A robot may not injure a human being or, through inaction, allow a human being to come to harm. 2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. 3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. But by 1951, with Cold War tempers running hot, the film The Day the Earth Stood Still took a more straightforward moral standpoint. It features the humanoid alien Klaatu, who comes to save the planet’s citizens from themselves but ends up getting shot for his pains. His robot Gort saves him and they jet off again, but not before delivering the ultimate good cop/bad cop deterrent: embrace peace or we’ll incinerate you. I (sl)

Photo courtesy Stiftung Deutsche Kinemathek

-

The Bionic Man

Hugh Herr, head of Biomechatronics at MIT

Hugh Herr at TED2014. (Photo: Ryan Lash, Flickr/TED Conference, CC BY-NC 2.0)

-

Hugh Herr is the director and principal investigator of the Biomechatronics research group at the MIT Media Laboratory. He is also the founder of BiOM, a prosthetics company transitioning prosthetics into Personal Bionics.

TED Talks

Watching the biophysicist and engineer Hugh Herr walking, running and jumping on stage during a TED Talk, his bionic legs laid bare for all to see, is about as uncanny as it can get. He is a human with mechanical augmentations that potentially render him superhuman, yet he moves with the natural ease and grace of any “able-bodied” mortal.

Hugh Herr is a bilateral amputee who has contributed greatly, through technology, to the shift in perception from disability to advantage. He lost both of his legs below the knee to frostbite whilst mountain climbing in 1982, at the age of 17. Prosthetics back then were crude, so Herr, a passionate climber, decided to make some improvements that would allow him to get back on the mountain.

Herr not only custom-designed his own legs, but went on to master the field of mechanical engineering and complete a PhD in biophysics. He is now head of the Biomechatronics Group at the MIT Media Lab, designing bionic prosthetics such as the BiOM T2 system, which responds to neural command and which he uses himself for walking. His group is also developing technologies for human augmentation (for both the “disabled” and the “enabled”) in the form of bionic exoskeletons. The underlying goal of the group is to explore technological interfaces that will ultimately merge our built world with nature.

The future that Hugh Herr and his team envisage is one of extreme bionics: a future where not only is disability eliminated, but humans are able to enhance their brains and bodies; a future where the narratives of disability are reversed; a future where cyborgs are commonplace and human hybrids the order of the day; a future that is already here. (sf)

(sf) Photo: Andrew Kornylak / Aurora Photos

-


A Kind Of Magic

A conversation about losing control over our own technology

By Crystal Bennes

Images by Tobias Revell

-

The less we understand about our personal technology, the easier it is to sign away our rights and, ultimately, control. We are delegating our responsibilities to algorithms and at the same time accepting what they give us in return as “magic”, up to the point where it becomes uncanny. The designer Tobias Revell and researcher Natalie Kane get critical about complacency with our Helsinki correspondent Crystal Bennes.


-

“Your average piece-of-shit Windows desktop is so complex that no one person on Earth really knows what all of it is doing, or how,” wrote journalist Quinn Norton in 2014. Together with Apple’s well-known slogan – “it just works” – these two expressions lie at the heart of conversations about magic, myth and technology that have long taken place within the design and technology industries and are now emerging onto a wider stage.

In early 2015 the designer Tobias Revell and researcher Natalie Kane curated a strand of the FutureEverything conference in Manchester, England, questioning the use of metaphors of magic and haunting in design and technology. “Magic was being used as an analogy to describe, for example, the way algorithmic systems work”, Kane says during a three-way video conversation. “Why do we keep thinking that Amazon recommendation systems are magic? There’s a lot to do with narratives of power, intent and agency – the conference was a starting point for a wider conversation over where we lose control over our own technology.”

Much of the technology industry remains visibly wedded to the Marquis de Condorcet’s idea of the “perfectibility of man”, as expressed in his 1794 book Sketch for a Historical Picture of the Progress of the Human Mind, which argues that continuous progress in the past must lead to indefinite progress in the future. In part, it’s the devotion to this myth of progress that has made it so easy for magic to find a comfortable home in technology. “We’re seeing new types of origin myths and future myths around things like survivalism and seasteading,” says Revell. “All of these systems of belief have a myth about using technology to surpass ourselves into the next plane of humanity. They’re all invested in a certain magical technology. For example, the ability to put a bunch of rich libertarians on a boat in the middle of the sea does not suddenly give you the magical ability to avoid tax law. Or not to have service staff and maintenance crew. But the image of the boat at sea has a magical romanticism to these people, which then builds into a future myth. And that myth attracts investment.”

With regard to personal technology, individuals are beginning to understand that the magic invoked in slogans like “you are more powerful than you think” (Apple again) is not merely magic that makes life easier, but represents a sleight of hand to disguise the fact that the company holds all the power. “[Hacker, writer and artist] Eleanor Saitta outlines this idea of the ‘mage’s circle’, where the knowledge of how these systems work is kept within a small group of people,” Revell says. “You’re not allowed to know how to fix your iMac, for example. You’re not even allowed to know how to open it. I’d put ‘terms and conditions’ in the same category – you’re signing away your legal rights for the future and you don’t even know what it means.”

»you choose to use the technology in exchange for not understanding 100 percent how it works«

Kane chimes in: “Those are your terms of access. It’s a trade-off; you choose to use the technology in exchange for not understanding 100 percent how it works. It isn’t always mean and malicious, as often they think they’re just making it simpler for you.”

Herein lies one of the most pressing problems in the broader conversation relating to the ease of so-called magical technology: responsibility. If our “terms of access” have now become such that we effectively sign away our rights to data, software and hardware ownership, as well as accessibility, is the magic still worth it? “It’s difficult in many cases”, Kane says, “because when something goes wrong our impulse is to look for a central responsibility, but the layers of accountability are distributed widely. To keep to the magical metaphor, should we blame the person who casts the spell or the culture of magic?”

-

Tobias Revell holds a BA in Design for Interaction and Moving Image from the London College of Communication and an MA in Design Interactions from the Royal College of Art, from which he graduated in July 2012. As well as being an internationally exhibiting artist, he is an associate designer at Superflux, a tutor in Design for Interaction and Moving Image at LCC, a visiting tutor on the MA Design Interactions at the Royal College of Art and a researcher for ARUP’s Foresight and Innovation in London.

He is a founding member of Strange Telemetry, a technology and social policy research studio.

tobiasrevell.com

Natalie Kane is a writer, researcher and curator working at the intersection of culture, technology, design and futures. She currently works at FutureEverything, a lab for digital culture and innovation based in Manchester, UK, and also holds a research position at the futures research lab Changeist. She has a particular interest in the uses and abuses of personal data, ethics and innovation in wearable technologies and issues surrounding the Quantified Self, as well as in the cultural and social impact of engineering.

ndkane.com

changeist.com

futureeverything.org


“For me, it’s the responsibility of the person who invokes the spell in the first place”, Revell adds. “My problem with something like Nest [a programmable thermostat and energy meter which can be accessed remotely; the company was bought by Google for $3.2 billion in 2014] is that you the consumer are making a decision not to be responsible for your impact on the climate. You’ve delegated that responsibility to a machine or an algorithm, which has been created by people you’ve never met but you assume share similar values. But it is your problem. You should think about it. You’re the human.”

Within the framework of the uncanny valley, products like Nest and other Internet of Things [a phrase coined by British tech entrepreneur Kevin Ashton in 1999 to refer to the new networks being created by the linking of physical objects to the internet] objects can offer a potentially fruitful analysis of the domestic space. “Everyone thinks of the uncanny as the robot that looks too much like a human”, Kane says, “but what if the uncanny valley is a home that pretends to be a home, but is actually much more terrifying than that. If your fridge is ‘magic’, do you not have the illusion of a home? Or more an anti-home?”

“It’s linked to the idea of haunting”, Revell says. “We’re installing devices in our homes which aren’t entirely under our control and we don’t understand how they work or how to fix them. That becomes deeply uncanny, because you’re then deeply suspicious of your own home. The security of understanding it as a home is swept away from under you. Looking at places like London, we now know our generation will forever be renters and things like Nest then have completely different implications. We’re already treating our homes as transient, small spaces. Once those are laced with devices and absent landlords, I imagine home will be a vastly different concept.”

-

Cyber Sculptors

Kram/Weisshaar's Robochop project

-

KRAM/WEISSHAAR are digital designers of spaces, products and media. The company was founded by Reed Kram and Clemens Weisshaar in Munich and Stockholm in 2002. The office now employs designers, architects and engineers from Germany, Spain, Sweden, the UK, the US and Japan.

Clemens Weisshaar (*1977, Munich) apprenticed as a metalworker before studying product design at Central Saint Martins College of Art and Design and the Royal College of Art in London. He was an assistant to Konstantin Grcic for three years before founding his first design office in 2000. He lives in Munich.

Reed Kram (*1971, Columbus, Ohio) holds a Bachelor of Science from Duke University and Masters of Arts and Sciences from the MIT School of Architecture and Planning, where he was a founding member of the Aesthetics and Computation Group at the Media Laboratory with John Maeda. Kram founded his first design office in the late 1990s. He lives in Stockholm.

This year at the CeBIT trade fair in Hanover – a major annual tech industry shindig – designers Reed Kram and Clemens Weisshaar of Kram/Weisshaar design studio responded to the fair’s theme, The Internet of Things, by creating Robochop for CODE_n, both as a publicity stunt and as a powerful demonstration of the possibilities unleashed once advanced robotics and 3D-modelling software are hitched to the internet.

Essentially, it involved four very large industrial robots let loose on 2,000 polystyrene cubes, each measuring 40 × 40 × 40 centimetres. The robots cut and shaped each cube into a unique design created by a member of the public – stools and tables, geometric polyhedrons and swoopy random forms – programmed using a 3D web app accessible to all.

The robots could be watched live at the fair going about their business in this process of remote subtractive manufacturing – but with a hint of creative magic: like mechanical Michelangelos sculpting each block to reveal the hidden form within.

But this was no slow, delicate chipping away; it was more like lambs to the slaughter: each block was grasped by embedded pins and manipulated with ruthless efficiency over a hot-wire cutting tool, cooled by compressed air.

Each end result was later shipped to its creator – although why anyone would want a badly designed polystyrene chair is another matter – but the bigger outcome is a future where anyone can remote-access and programme heavy industrial manufacturing to design and produce for them in fab labs anywhere in the world. (rgw)

(rgw) Photo: © Matthias Ziegler, courtesy KRAM/WEISSHAAR

-

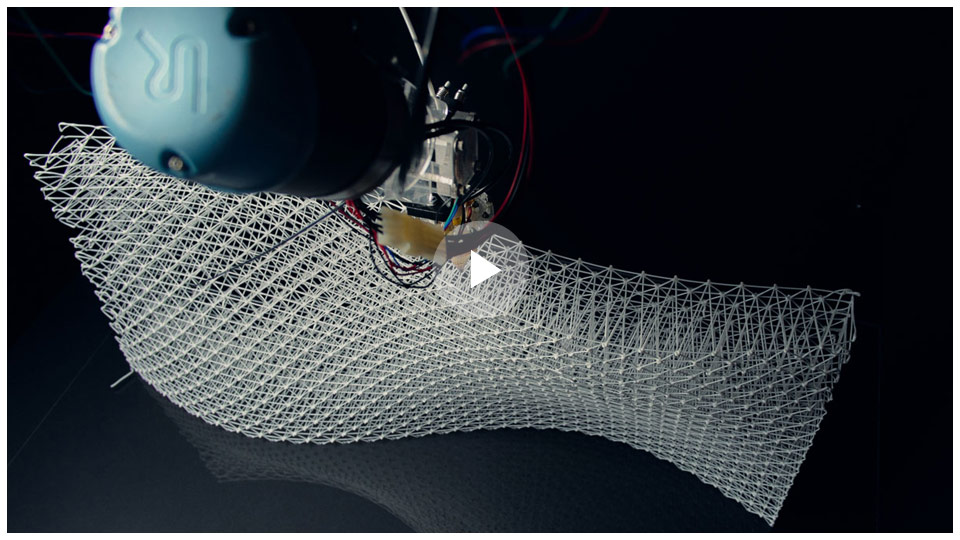

The Otherness of the Machine

Jan Willmann, Senior Research Assistant at the Chair of Architecture and Digital Fabrication at ETH Zurich, explains the current status of robotics in architecture and pinpoints the most promising paths for the future of constructive systems.

Interview by Fiona Shipwright

-

Dr Willmann, you trained as an architect but now primarily work on mapping the connections between architectural theory and computer aided design. Can you explain a little about how these interests converged and how your interest in robots in architecture arose?

In my opinion, today’s very specific implementation of robotic fabrication processes – in comparison to the lack of material substance in the early days of architecture’s digitalisation during the 1990s – is practically forcing architecture’s arrival in the digital age. Particularly from a theoretical/historical perspective this is very interesting since we are no longer witnessing the delayed modernisation of the discipline, but rather the advent of a uniform technological basis for architecture, which since the onset of building industrialisation in the early 20th century has remained more vision than reality. Clearly, this has a number of substantial implications; for instance, with this shift in the production conditions, the Albertian division which has determined architectural practice for the past 500 years, between intellectual work and manual production – between design and realisation – is now being rendered obsolete.

At the same time, in this “Second Digital Age” a wide range of inherently architectural topics are finding their way back onto the agenda, such as questions of standardisation and authorship. What still remains vague, though, are the ties to architectural history, the theoretical implications and the future prospects of all this. I always felt that explorations into our current digital age must therefore broaden to include both practical and theoretical perspectives. This requires that robotic technology be regarded not only as a medium of production, but also as an epistemological approach and a cultural interface. To me the question is whether digital technologies can impact and even change architectural thinking, and the task is to explore critically what happens if architecture absorbs this new connection between computational logics and material realisation. Only then will it be possible to stimulate relevant and rigorous exploration, discussion, principles and realities, and ultimately to open up new ways to understand and perceive architecture in the digital age.

What about the specific integration of tangible robotic and mechanised hardware into the building process, as opposed to less tangible design or system-control software? What is the current status of robots in architecture? Where and how are they being implemented, and what kinds of robots are used?

That’s a very important question, since a very wide range of robotic technology is currently used in architecture, such as robotic systems for adaptive façades, or aerial drones for spatial printing processes.

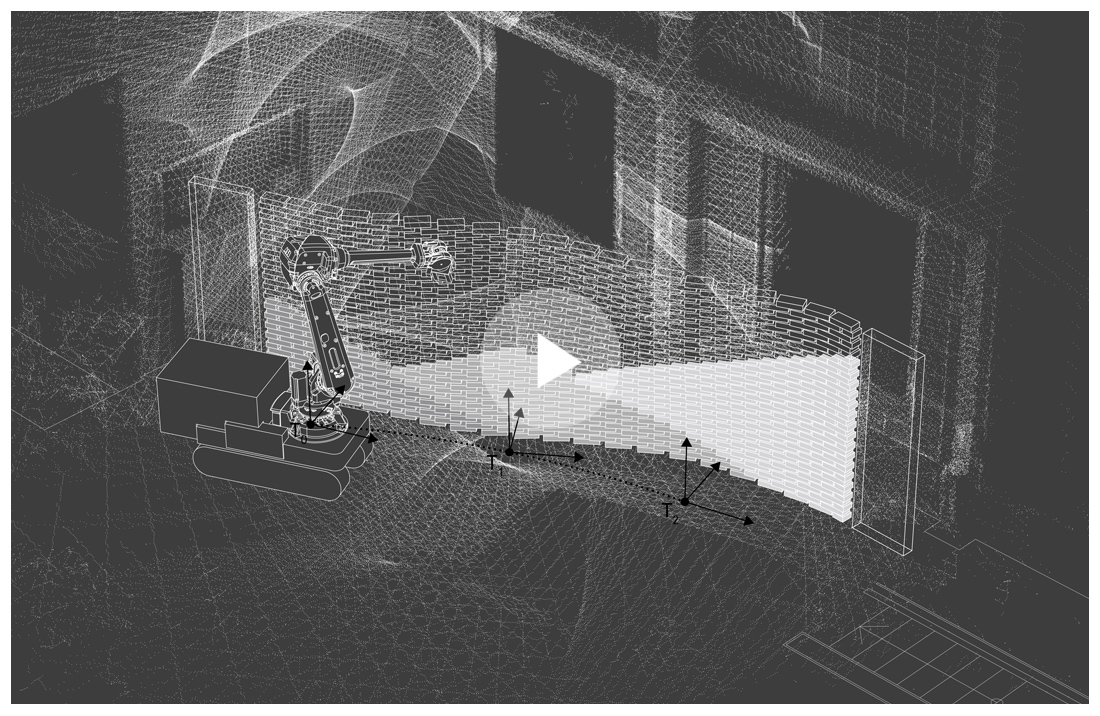

Previous page: Spatial Wire Cutting project, producing geometrically complex architectural elements using hot wire cutting. Gramazio Kohler Research, ETH Zurich, 2015. (All images: © Gramazio Kohler Research, ETH Zurich unless otherwise stated)

-

Remote Material Deposition, in which an industrial robot throws material (in this case wet clay) over distance in an attempt to move beyond the creation of static forms. Gramazio Kohler Research, ETH Zurich, 2014.

-

Overall, I think this shift towards a new interpretation of the automatic is enthralling. The current status of robots in architecture can be seen in manifold research explorations, with a seemingly new “intimacy” between the designer and his/her tools. That said, one should not forget that this whole development started only 10 years ago. What we are currently missing – despite all these exciting projects – are concrete industrial and large-scale applications in the construction sector, as well as a more elaborated vision of the overall cultural impact this might have.

Beyond this, specific experiments matter, since robotic research in architecture is not a good topic to speculate about. So a very reasonable – and pragmatic – approach is the use of industrial robots, as pursued at ETH Zurich. This is because of the robot’s ability to perform an unlimited variety of non-repetitive assembly tasks at full architectural scale, and because six-axis robotic arms are conventional off-the-shelf products that are cost-efficient, fairly robust and flexible. The designer therefore does not need to concentrate on the technological development of a robot, but can focus on the design of the robot’s toolhead and, most importantly, on robot-induced designs and construction processes.

What is the current global state of robotic research in architecture?

There are currently over 35 architectural research and teaching institutions using industrial robots for their explorations. Following ETH Zurich, where the first robotic laboratory for architectural non-standard assembly processes was installed in 2005, a number of promising architectural case studies and prototypical structures have been realised worldwide, elevating bespoke automated manufacturing to the role of a constitutive design and construction tool. The range of materials and processes is quite broad, including robotic brickwork, robotic timber construction, robotic concrete manipulation, robotic 3D printing and carbon weaving, robotic wire cutting, etc.

Besides this wide range of material experimentation and upcoming trends such as structurally driven robotic fabrication or research into particularly material-efficient digital fabrication processes, another major direction of all this research is “upscaling”. This includes not only physical upscaling to 1:1 building processes, but also functional upscaling (e.g. multi-material systems and multi-functional construction components) and disciplinary upscaling (e.g. architects working directly with structural designers, material scientists, and robotic and electrical engineers). This seems to be not only the next logical step but also one of the most promising ones in terms of more refined, holistic and applicable design solutions and constructive systems.

First we had the singular “robotic arm”, now we have tangible examples of structures built by swarms of flying drones – what are the implications for architecture and engineering?

As well as “upscaling” there is also the expansion of these technologies. Key to this are multi-robotic processes, such as two to three industrial robots collaborating during assembly or a fleet of drones building up a structure in airspace. The technical and material implications are manifold, since these processes are not only much faster than conventional single-robot assemblies, but also open up a completely new landscape of complexity. For instance, imagine a geometrically complex truss structure assembled from simple generic elements. Here, one robotic arm holds an element in space, whilst the second brings in another and a third connects the two. Such complex spatial assemblies would not be possible with only one robotic arm.


Mesh-Mould, Gramazio Kohler Research, ETH Zurich, 2013.

-

Overall, the conceptual possibilities are almost unlimited. However, from a technological perspective this is still very demanding, since there are no enhanced control systems/routines for these custom cooperative construction processes yet.

From a conceptual point of view, multi-robotic processes are particularly interesting since they not only allow the realisation of more complex and physically larger design propositions but also force designers to think in truly three-dimensional logistical space, in which they have to orchestrate a range of different (co-)operations, just like on a construction site. It’s the whole organisation that counts.

What about the move towards robots that are “situationally aware” – that is, adaptable to changing surroundings and conditions?

The range of robotic processes is gradually expanding, particularly towards the direct use of robots on the construction site. On the one hand, the small size of such robots is a limitation; on the other, it holds significant potential.

Their payload limitation does not allow them to handle materials of the sizes and weights commonly used in construction. However, their small dimensions and low mass do make them suitable for use directly on the building site. Mounted on a mobile platform and equipped with sensors, for example, multiple robots can navigate the site, react dynamically to tolerances and to the changing conditions of such an uncertain environment, and fabricate architectural structures directly in situ. As a consequence, such situationally aware mobile robotic systems allow for the continuous production and erection of large-format or even complex construction systems over the course of an open-ended, on-site building process.

The Sequential Roof Production Facility ERNE AG Holzbau, Gramazio Kohler Research, ETH Zurich, 2015.

A critical necessity for this is the ability of the robot to orient and position itself autonomously. A closed-loop system and corresponding sensor-driven cognitive skills, using 3D-cameras and laser scanners and the like, ensure the direct integration of incoming information into the logic and articulation of the building components as well as real-time input that enhances the interlinking of digital data and the concrete material.

Overall, this closed “digital chain”, from computational design to realisation directly on the construction site, does indeed seem to be one of the most promising avenues for future robotic construction, leading to smart, mobile and versatile construction robots that are able to co-operate, navigate directly on the construction site and adapt their construction operations.

Building Strategies for On-site Robotic Construction, Gramazio Kohler Research and Agile & Dexterous Robotics Lab, NCCR Digital Fabrication, ETH Zurich, 2015.

-

Jan Willmann is Senior Research Assistant at the Chair of Architecture and Digital Fabrication at ETH Zurich. He studied architecture in Liechtenstein, Oxford and Innsbruck, where he received his PhD in 2010. He was previously a research assistant and lecturer at the Chair of Architectural Theory of Professor Ir. Bart Lootsma and gained professional experience in numerous architectural offices. His research focuses on digital architecture and its theoretical implications as a composed computational and material score. He has lectured and exhibited internationally, and published extensively, including The Robotic Touch: How Robots Change Architecture (Park Books, 2014) together with Fabio Gramazio and Matthias Kohler.

gramaziokohler.arch.ethz.ch

Where does, or will, AI come into the equation? Do we need construction robots to have intelligence and decision-making capabilities? Why and in what way?

This is a critical question because in this context the term “intelligence” could be misleading. According to the computational scientist Rolf Pfeifer, an intelligent robotic system has much to do with its general (mechanical/systemic) morphology and the context it is used in. A very simple and “low-tech” robotic system can act very intelligently if it is well constructed for the purpose it is used for.

On the other hand, quite frankly, the robot itself has no intelligence at all. It is a pretty stupid machine unless you equip it with enough sensors and control devices and programme it to react in a certain way in certain scenarios, and even then it can only follow instructions that a human has programmed. So, no magic, unfortunately. Sure, machine learning algorithms and dynamic control are important topics in robotics in general, but in the field of digital fabrication it might take another decade for them to become operational.

I would therefore frame this AI discourse differently and instead introduce the concept of “otherness”, suggested by Antoine Picon. Today, everything in architectural robotics is happening as if robots are going to remain machines for execution forever. But if you overcome this strange short-sightedness and consider their true machinic potential at the very early stages of architectural conception, it could well represent the next step in exploring the use of robots in architecture. Why not imagine a unified design and fabrication process, in which this “otherness” of the machine corresponds to the designers as well as the workers in the process?

Flight Assembled Architecture, Gramazio & Kohler and Raffaello D'Andrea in cooperation with ETH Zurich, FRAC Centre Orléans, 2011/12. (Photo: © François Lauginie)


In Situ Robotic Fabrication/The Fragile Wall, Gramazio Kohler Research, ETH Zurich, 2012.

-

Tug Bugs

Could swarms of microbot workers become game-changers in the future of building? The Biomimetics and Dextrous Manipulation Lab at Stanford University has developed a 12-gram MicroTug robot which uses controllable adhesive – similar to ants – to pull two thousand times its own weight. “This is the equivalent of a human adult dragging a blue whale around on land.” (sl)

Image: stanford.edu

-

Matter Design is an interdisciplinary design practice founded in 2008 by Brandon Clifford and Wes McGee, centred on the continual interrogation of the reciprocity between drawing and making. Their shared interests in design coupled with proficiency in the means and methods of production have led Clifford and McGee to collaborate on a range of experimental projects which break conventional disciplinary notions of scale. Matter Design is dedicated to re-engaging the discipline of architecture with the nuances of matter in the digital era.

Matter Design is also a research practice that has been actively engaged in industrial robotics from its inception; robotic tools are an ideal platform for interrogating the translation from design to production.

Matter Design’s work has been exhibited worldwide and received numerous awards. In addition to the continual collaboration of the practice, the principals are currently based at the University of Michigan (Wes McGee) and the Massachusetts Institute of Technology (Brandon Clifford).

matterdesignstudio.com

-


Microtherme, Brandon Clifford + Wes McGee, 2015. (Photo © Matter Design)

Industrial robots are generic machines, designed for high reliability as well as interoperability with other industrial equipment. But they are also inherently custom tools, requiring an integrative approach to software development, tooling and material process. This capacity for customisation produced an ideal platform for a generation of designers and makers for whom typical digital fabrication tools, like CNC routers and laser cutters, were not enough. Contemporary robotic fabrication in architecture follows two trajectories: the use of robotic tools in novel prefabrication applications, and their use as on-site construction tools. Within both of these trajectories, a wide range of novel material processes are being explored at the scale of architecture. Real-time sensing and intelligent processes enable robotic tools to respond to both the material and the environment, which is critical for their application on “unstructured” construction sites. The focus of our selection here is on organisations and projects that are close to, or beginning, commercial application.

-


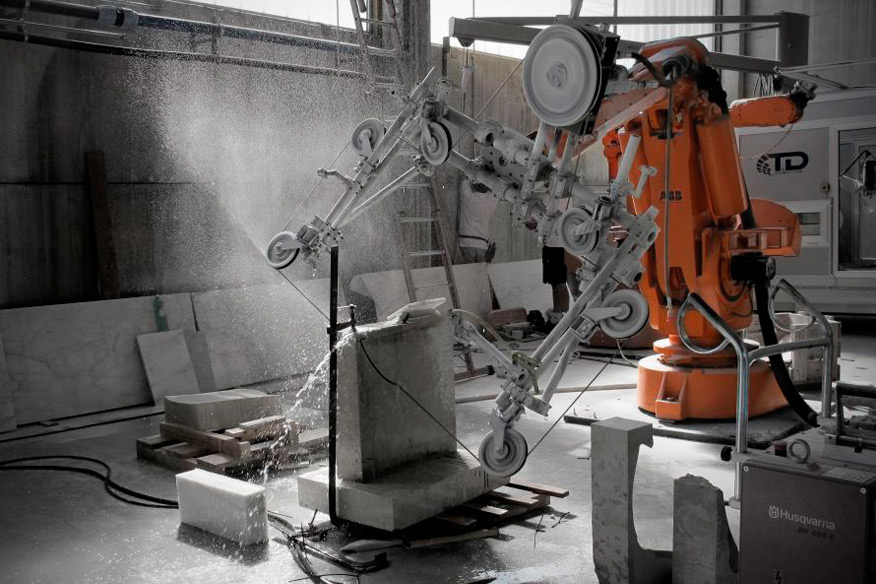

Asbjørn Søndergaard, Jelle Feringa and Anders Bundsgaard

—

Odico is a technology start-up founded in 2012 that grew out of academic research at the Hyperbody research group at TU Delft. It is based in Odense, Denmark, and focuses on robotic fabrication at the scale of architecture. Complex formwork production for concrete casting typically requires extensive CNC milling, but Odico translated the concept of the ruled surface into a fabrication technique (robotic hot-wire cutting) that can reduce machining time by up to a hundredfold. Extending this further by using a diamond wire to cut stone produces entirely new forms in a fraction of the time needed by traditional techniques.

odico.dk

ABB 6400 model with a custom end-effector. (Photo: Jelle Feringa, 2013)

Höweler + Yoon Architecture and Quarra Stone

—

Quarra Stone has been fabricating stone for over 20 years and introduced robotic technology into its workflow a decade ago. More recently it has taken on the production of complex geometries, notably the Sean Collier Memorial on the MIT campus by Höweler + Yoon Architecture. One of the keys to the realisation of this project was Quarra’s ability to work with the engineering and design teams. The architects, along with their consultants, developed a computational design tool that permitted the nesting of massive components into a catalogue of quarried blocks, allowing real-time design feedback on seam placement and fabrication constraints. Quarra’s extensive machining experience allowed it to achieve sub-millimetre accuracy on components weighing over five tons.

hyarchitecture.com

The Sean Collier Memorial, 2014. (Photo © Iwan Baan)

ICD and ITKE, University of Stuttgart

—

The Institute for Computational Design and the Institute of Building Structures and Structural Design at the University of Stuttgart are world-renowned for their cutting-edge research into architectural applications of robotic fabrication and biological construction. Their 2014–15 pavilion is based on the web construction process of water spiders. One particularly interesting development in this project is the use of real-time adaptive control of the robot, allowing the system to conform to the pneumatic formwork, which constantly reacts to the build-up of composite material on its surface. Through a highly integrated computational design and fabrication process the designers are able to maximise material efficiency. The final shell covers an area of 40 square metres with only 260 kilogrammes of material.

icd.uni-stuttgart.de

Pneumatic formwork with robotic fibre reinforcement (Photo: © ICD/ITKE University of Stuttgart)

Matthias Kohler & Fabio Gramazio and ETH Zurich

—

The work of Matthias Kohler and Fabio Gramazio has arguably inspired a generation of architectural researchers to apply industrial robotics to building-scale fabrication. While they are perhaps best known for their work on parametrically designed brick facades, they have also completed an extensive range of research and design projects at the Chair of Digital Fabrication at ETH Zurich. One of their most far-reaching applications focuses on a multidisciplinary approach to in situ fabrication involving architects, robotic engineers and machine integrators. In May 2015 they presented an autonomous construction robot prototype at NCCR Digital Fabrication. Whilst it is still likely to be a few years before such fabrication equipment appears directly on the construction site, the application of real-time sensing and vision to fabrication problems has immediate benefits in a wide range of prefabrication applications.


gramaziokohler.arch.ethz.ch

Building Strategies for on-site Robotic Construction, Gramazio Kohler Research and Agile & Dexterous Robotics Lab, NCCR Digital Fabrication, ETH Zurich, 2015. (Film: ETH Zurich)

-

Forever Alone

Self-portrait taken by the Mars rover Curiosity, which is currently exploring and collecting data on the climate and geology of Mars in preparation for a further rover mission planned for 2020. Shot in Gale Crater on October 31, 2012, shortly before its mission was extended indefinitely. (sl)

Photo: NASA

-

In the Photo Booth with ...

Katarzyna Krakowiak

Polish artist Katarzyna Krakowiak is interested in uncovering the limits of architectural space. Known particularly for working with sound, she often uses it as a medium to explore monumental spaces, as her installation Making the walls quake as if they were dilating with the secret knowledge of great powers in the Polish Pavilion at the 2012 Venice Biennale demonstrated. She was recently in Berlin for her show Out of Tune at the Polish Institute, and spoke to Fiona Shipwright about concert halls, junkspace and her developing interest in sounding out the city.

Interview and photos by Fiona Shipwright

-

You work a lot with sound, a medium that defies gravity, whilst simultaneously working in some very “heavy” spaces, physically and culturally, such as the Polish Pavilion in Venice. What is it you like about the medium?

I love the feeling that I’m taking over a space. I remember the moment when I was working inside the huge interior of a water tower and realised that by using sound I could take over a whole building without constructing anything physical. I think this idea of “taking over” is also connected to how I’m slowly moving towards urbanism in my work.

In 2013, whilst I was installing a sound piece at the Penn Station Post Office in New York, I came to study how Manhattan developed from farms into streets. For me, scale always comes back to the human, but I’m interested in understanding what’s going on in between. With urbanism I don’t believe in a division between outside and inside; it’s more a situation without borders – which sound can transcend.

Your show “Out of Tune” in Berlin references Rem Koolhaas’ concept of “junkspace” – the architectural debris that spills into the voids of the contemporary city and makes up its fabric. What is it about this idea that is attractive to you?

Recently I’ve been working with recordings of a single voice and considering the individual perspective. I think it’s important to be aware of the imbalance between a single voice and the city. You have a situation where the voice is trying to tune to the city but there is no communication between the two. It’s a one-way exchange, but that is not necessarily a bad thing. This is our normal condition: we want to tune into, be part of something. I’ve also been working with 3D printing, which I actually think generates real “junkspace”. You can print anything you want, but the problem is that it produces objects – and I think that today it’s no longer about the object.

In such an image-dominated field, do you feel that communicating architectural spaces using sound can be a more arresting, effective method?

That aspect of the work is very important to me. The documentation photographs of the Polish Pavilion piece show how people really “exist” in space when they listen. They don’t really speak to each other; it’s a very individual experience. I think my work is not so much about communicating but more about understanding that we are in fact not communicating with each other. I feel this is a very important issue for architecture and is partly why I chose to present my work at the architecture, not the art, biennale.

Within an architectural context, sound is often treated merely as an acoustic problem. Even the way concert halls are designed for the perfect sound – it is so boring. In Poland this amazing concert hall was built recently, and of course it’s a beautiful space architecturally, but is the fact that its sound is “better” than other concert halls’ really the only thing anyone wants to talk about in terms of its acoustics?

Katarzyna Krakowiak (b. 1980) is a sculptor who uses sound to explore the limits of architecture. She creates large-scale sound sculptures involving existing buildings, often at 1:1 scale. Krakowiak graduated from the Academy of Fine Arts, Poznan in 2006, and was awarded her PhD, supervised by Miroslaw Balka, at the Academy of Fine Arts, Warsaw in 2013. “Making the walls quake as if they were dilating with the secret knowledge of great powers” received an Honourable Mention at the Venice Architecture Biennale in 2012.

krakowiak.hmfactory.com

Do you mean Szczecin Philharmonic Hall – which won the 2015 Mies van der Rohe Award?

Yes – I really love its surprising, kitschy gold heart. But the powerful sense of the building doesn’t come from its “perfect” sound. I think we should try to create more individual-sounding spaces for both noise and silence. Try to imagine hearing familiar music up in the Himalayas – because of the pressure there, the conditions, you experience it in a new way. That’s why I’m interested in the idea of junkspace, but not only from the perspective Koolhaas proposed; I want to talk to people about all their experiences of different conditions and situations.

-

Issue No. 37:

October 1st 2015

Image © Zaha Hadid Architects

-


The Sequential Roof Production Facility ERNE AG Holzbau, Gramazio Kohler Research, ETH Zurich, 2015.
